Stochastic Control

Recent research has focussed on developing algorithms that increase the level of autonomy of air and space systems. Examples include controllers that plan the future path of a team of UAVs so that the path is optimal while avoiding obstacles or threat regions; planning algorithms that schedule a sequence of tasks for a Mars rover; and fault detection algorithms for a deep space probe. A critical problem that prevents the widespread adoption of these methods outside of simulation, however, is a lack of robustness. Existing control algorithms typically do not take into account the inherent uncertainty that arises in the real world, which can lead to catastrophic failure. Uncertainty can arise due to:

1. Disturbances that act on the vehicle (for example wind acting on a UAV)

2. Uncertain localization (for example visual odometry on the Mars Exploration Rover)

3. Uncertain system modeling

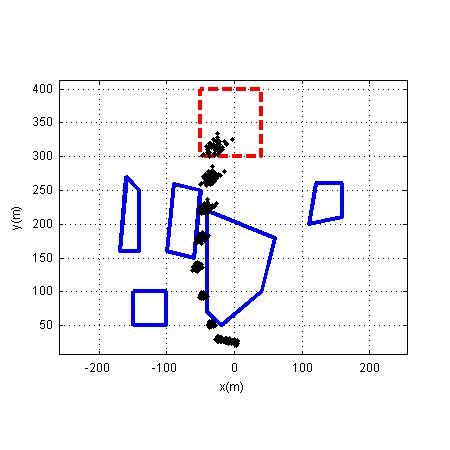

In the case of UAV path planning, an optimal controller that does not take uncertainty into account will plan a path that only just avoids obstacles. This plan is highly brittle, since even a small disturbance due to wind can cause a collision and hence catastrophic failure. There is therefore a need for control algorithms that are robust to uncertainty.

In my research, I have posed the problem of robust, optimal control as a constrained probabilistic control problem. The solution to this problem aims to find the best sequence of control inputs while taking into account uncertainty, so that the probability of failure is less than a specified value. There are two particularly powerful features of this probabilistic formulation. First, methods exist for describing uncertainty in localization, in modeling and due to disturbances, in a probabilistic manner. Second, by specifying a maximum probability of failure, the desired level of conservatism in a plan can be controlled. In a UAV path planning scenario, this means that for a very low probability of failure the controller will plan a path far from obstacles, while for a higher probability of failure the optimal path will be close to obstacles. The more conservative path will use more fuel, but will fail less often.
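
As an illustration, a problem of this kind can be written along the following lines. The notation is assumed here for the sketch and is not taken from the publications listed below: $x_k$ is the uncertain state, $u_k$ the control input, $w_k$ a random disturbance, $f$ the system model, $J$ a cost such as fuel use, $\mathcal{F}$ the region free of obstacles, and $\delta$ the specified maximum probability of failure. Taking the expected cost as the objective is also an assumption.

```latex
\begin{aligned}
\min_{u_0, \dots, u_{T-1}} \quad & \mathbb{E}\left[ J(x_{0:T}, u_{0:T-1}) \right] \\
\text{subject to} \quad & x_{k+1} = f(x_k, u_k, w_k), \qquad k = 0, \dots, T-1, \\
& \Pr\left( \exists\, k \in \{1, \dots, T\} : x_k \notin \mathcal{F} \right) \le \delta .
\end{aligned}
```

A small $\delta$ forces the planned path away from the boundary of $\mathcal{F}$, while a larger $\delta$ allows the path to pass closer to obstacles at lower cost.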

I have developed a number of methods that solve the robust probabilistic control problem using efficient constrained optimization techniques. The first method, which applies to Gaussian probability distributions, is able to solve for a robust sequence of controls in the same time as existing controllers that do not take into account uncertainty. This work is described here.
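
One standard reason the Gaussian case can be handled cheaply is that a single linear constraint on a Gaussian state has a deterministic equivalent: the constraint is applied to the mean, tightened by a safety margin that depends on the covariance and on the allowed failure probability. The Python sketch below illustrates that textbook result only; it is not necessarily the method used in the work described above, and the function names and the example numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def safety_margin(a, cov, delta):
    """Back-off needed so that P(a.x <= b) >= 1 - delta for Gaussian x.

    a     : constraint normal vector (list of floats)
    cov   : covariance matrix of x (list of rows)
    delta : maximum allowed probability of violation, 0 < delta < 1
    """
    n = len(a)
    # The scalar a.x is Gaussian with variance a^T cov a.
    variance = sum(a[i] * cov[i][j] * a[j] for i in range(n) for j in range(n))
    # Standard normal quantile for the required confidence level.
    z = NormalDist().inv_cdf(1.0 - delta)
    return z * sqrt(variance)


def satisfies_chance_constraint(a, b, mean, cov, delta):
    """Check the deterministic equivalent: a.mean + margin <= b."""
    nominal = sum(ai * mi for ai, mi in zip(a, mean))
    return nominal + safety_margin(a, cov, delta) <= b


if __name__ == "__main__":
    # Hypothetical example: a wall at x = 10 and 1 m position uncertainty.
    a, b = [1.0, 0.0], 10.0
    cov = [[1.0, 0.0], [0.0, 1.0]]
    for delta in (0.001, 0.3):
        print(delta, safety_margin(a, cov, delta))
```

Because the margin does not depend on the mean, the tightened constraint is still linear in the planned mean position, so it has the same form as the constraint in a controller that ignores uncertainty. A smaller `delta` gives a larger margin and therefore a more conservative plan.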

Particle-based Control Methods

With non-Gaussian probability distributions the problem becomes significantly harder. In the field of estimation, methods based on sampling, also known as "particle filtering", overcame this problem by representing probability distributions using a number of "particles". Drawing from these ideas, I have developed a method by which a distribution of particles can be controlled in an optimal manner to solve the robust control problem. The key idea behind this particle control method is to turn an intractable probabilistic control problem into a tractable deterministic one. I am currently applying particle control to create optimal robust path plans with non-Gaussian uncertainty, as described here.
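
The idea of replacing a probabilistic constraint with a deterministic one can be sketched in Python as follows. Once a set of disturbance particles has been sampled and fixed, the fraction of particle trajectories that hit an obstacle is a deterministic function of the control sequence, and it approximates the true probability of failure. Everything specific in this sketch is an assumption made for illustration: the one-dimensional dynamics, the gust model, the wall position and the function names. The sketch only evaluates a given control sequence and does not perform the optimization itself.

```python
import random


def sample_disturbances(n_particles, horizon, seed=0):
    """Draw one disturbance sequence per particle.

    The distribution is deliberately non-Gaussian: small uniform noise
    plus an occasional strong gust toward the obstacle.
    """
    rng = random.Random(seed)
    particles = []
    for _ in range(n_particles):
        sequence = []
        for _ in range(horizon):
            w = rng.uniform(-0.1, 0.1)
            if rng.random() < 0.05:  # rare gust
                w += 0.5
            sequence.append(w)
        particles.append(sequence)
    return particles


def failure_fraction(controls, particles, x0=0.0, wall=1.0):
    """Fraction of particles whose trajectory reaches the wall.

    Assumed dynamics: x[k+1] = x[k] + u[k] + w[k].
    """
    failures = 0
    for sequence in particles:
        x = x0
        for u, w in zip(controls, sequence):
            x = x + u + w
            if x >= wall:
                failures += 1
                break
    return failures / len(particles)


if __name__ == "__main__":
    particles = sample_disturbances(n_particles=1000, horizon=5)
    aggressive = [0.15] * 5  # moves toward the wall
    cautious = [0.0] * 5     # holds position
    print("aggressive:", failure_fraction(aggressive, particles))
    print("cautious:  ", failure_fraction(cautious, particles))
```

In a planner built on this idea, an optimizer would search over `controls` subject to `failure_fraction(controls, particles)` staying below the specified maximum probability of failure. Using more particles makes the approximation more accurate at the price of a larger optimization problem.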

Related Publications

"Convex Chance Constrained Predictive Control without Sampling. " L. Blackmore and M. Ono. In the proceedings of the AIAA Guidance, Navigation and Control Conference 2009.

"Robust Path Planning and Feedback Design under Stochastic Uncertainty." L. Blackmore. In the proceedings of the AIAA Guidance, Navigation and Control Conference 2008. Best Paper in Session.

"A Probabilistic Particle Control Approach to Optimal, Robust Predictive Control." L. Blackmore. In the proceedings of the AIAA Guidance, Navigation and Control Conference 2006. Best Student Paper Award winner.